Every company is sucking up data scientists and machine learning engineers. You usually hear that serious machine learning needs a beefy computer and a high-end Nvidia graphics card. While that might have been true a few years ago, Apple has been stepping up its machine learning game quite a bit. Let's take a look at where machine learning is on macOS now and what we can expect soon.

2019 Started Strong

More Cores, More Memory

The new MacBook Pro's 6 cores and 32 GB of memory make on-device machine learning faster than ever.

Depending on the problem you are trying to solve, you might not be using the GPU at all. Scikit-learn and some others only support the CPU, with no plans to add GPU support.

eGPU Support

If you are in the domain of neural networks or other tools that would benefit from GPU, macOS Mojave brought good news: It added support for external graphics cards (eGPUs).

(Well, for some. macOS only supports AMD eGPUs. This won't let you use Nvidia's parallel computing platform CUDA. Nvidia have stepped into the gap to try to provide eGPU macOS drivers, but they are slow to release updates for new versions of macOS, and those drivers lack Apple's support.)

Neural Engine

2018's iPhones and new iPad Pro run on the A12 and A12X Bionic chips, which include an 8-core Neural Engine. Apple has opened the Neural Engine to third-party developers. The Neural Engine runs Metal and Core ML code faster than ever, so on-device predictions and computer vision work better than ever. This makes on-device machine learning usable where it wouldn't have been before.

Experience Report

I have been doing neural network training on my 2017 MacBook Pro using an external AMD Vega Frontier Edition graphics card. I have been amazed at macOS's ability to get the most out of this card.

PlaidML

To put this to work, I relied on Intel's PlaidML. PlaidML supports Nvidia, AMD, and Intel GPUs. In May 2018, it even added support for Metal. I took Keras code written to run on top of TensorFlow, changed Keras's backend to PlaidML, and, without any other changes, was training my network on my Vega chipset on top of Metal instead of OpenCL.
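A minimal sketch of that backend swap, assuming the `plaidml-keras` package is installed and a device has already been chosen (PlaidML's documentation describes a `plaidml-setup` command for that). The tiny MNIST-shaped model and the function names are illustrative only, not the network from this post.

```python
import os

# Module path PlaidML documents as its Keras backend.
PLAIDML_BACKEND = "plaidml.keras.backend"


def use_plaidml_backend():
    """Select PlaidML as the Keras backend. Must run before `import keras`."""
    os.environ["KERAS_BACKEND"] = PLAIDML_BACKEND
    return os.environ["KERAS_BACKEND"]


def build_model():
    """Build a small illustrative classifier (hypothetical, MNIST-shaped)."""
    # Imported lazily so the backend choice above takes effect first.
    from keras.models import Sequential
    from keras.layers import Dense, Flatten

    model = Sequential([
        Flatten(input_shape=(28, 28)),
        Dense(128, activation="relu"),
        Dense(10, activation="softmax"),
    ])
    model.compile(optimizer="adam",
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model


# Typical use (requires plaidml-keras and keras to be installed):
#   use_plaidml_backend()
#   model = build_model()
#   model.fit(x_train, y_train, epochs=3)
```

The only PlaidML-specific line is the environment variable; everything in `build_model` is ordinary Keras code, which is why existing scripts can move over unchanged.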

What about Core ML?

Why didn't I just use Core ML, an Apple framework that also uses Metal? Because Core ML cannot train models. Once you have a trained model, though, Core ML is the right tool to run them efficiently on device and with great Xcode integration.

Metal

GPU programming is not easy. CUDA makes managing stuff like migrating data from CPU memory to GPU memory and back again a bit easier. Metal plays much the same role: Based on the code you ask it to execute, Metal selects the processor best-suited for the job, whether the CPU, GPU, or, if you're on an iOS device, the Neural Engine. Metal takes care of sending memory and work to the best processor.

Many have mixed feelings about Metal. But my experience using it for machine learning left me entirely in love with the framework. I discovered Metal inserts a bit of Apple magic into the mix.

When training a neural network, you have to pick the batch size, and your system's VRAM limits how large it can be. The largest workable size also changes based on the data you're processing. With CUDA and OpenCL, your training run will crash with an 'out of memory' error if the batch turns out to be too big for your VRAM.

When I reached 99.8% of my GPU's 16GB of VRAM, my model didn't crash under Metal the way it did under OpenCL. Instead, my Python process's memory usage jumped from 8GB to around 11GB.

When I went over the VRAM size, Metal didn't crash. Instead, it started using RAM.

This VRAM management is pretty amazing.

While using RAM is slower than staying in VRAM, it beats crashing, or having to spend thousands of dollars on a beefier machine.
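For backends that do crash when a batch does not fit, a common workaround is to retry with a smaller batch. The sketch below illustrates that idea only; it is not something this post's benchmarks used. `largest_batch_that_fits` and `fake_train_one_batch` are hypothetical names, and `MemoryError` stands in for whatever out-of-memory exception a real framework raises.

```python
def largest_batch_that_fits(train_one_batch, start_batch_size, min_batch_size=1):
    """Halve the batch size until one training step succeeds.

    `train_one_batch(batch_size)` is a caller-supplied function that runs a
    single step and raises MemoryError when the batch does not fit.
    """
    batch_size = start_batch_size
    while batch_size >= min_batch_size:
        try:
            train_one_batch(batch_size)
            return batch_size
        except MemoryError:
            # Too big for the device: try again with half as many samples.
            batch_size //= 2
    raise MemoryError("no batch size >= %d fits" % min_batch_size)


def fake_train_one_batch(batch_size, limit=300):
    """Stand-in for a real training step; pretends `limit` samples fit."""
    if batch_size > limit:
        raise MemoryError("batch of %d does not fit" % batch_size)


if __name__ == "__main__":
    print(largest_batch_that_fits(fake_train_one_batch, 1024))
```

With Metal spilling into system RAM as described above, this kind of probing becomes a tuning aid for speed rather than a requirement for the run to finish.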

Training on My MBP

The new MacBook Pro's Vega GPU has only 4GB of VRAM. Metal's ability to transparently switch to RAM makes this workable.

I have yet to have issues loading models, augmenting data, or training complex models. I have done all of these using my 2017 MacBook Pro with an eGPU.

I ran a few benchmarks in training the 'Hello World' of computer vision, the MNIST dataset. The test was to do 3 epochs of training:

- TensorFlow running on the CPU took about 130 seconds an epoch: about 6.5 minutes total.

- The Radeon Pro 560 built into the computer could do one epoch in about 47 seconds: about 2.4 minutes total.

- My AMD Vega Frontier Edition eGPU with Metal was clocking in at about 25 seconds an epoch: about 1.3 minutes total.
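One way per-epoch timings like these could be collected is with a small wall-clock harness. This is a sketch, not the script behind the numbers above; `time_epochs` and the `train_one_epoch` callback are hypothetical names, and the callback is assumed to run exactly one epoch of training.

```python
import time


def time_epochs(train_one_epoch, epochs=3):
    """Run `train_one_epoch(epoch_index)` repeatedly and record wall-clock time."""
    durations = []
    for epoch in range(epochs):
        start = time.perf_counter()
        train_one_epoch(epoch)
        durations.append(time.perf_counter() - start)
    total = sum(durations)
    return {
        "per_epoch": durations,      # seconds for each epoch
        "average": total / epochs,   # mean seconds per epoch
        "total": total,              # seconds for the whole run
    }


# Typical use with a Keras model (assumes `model`, `x`, and `y` exist):
#   stats = time_epochs(lambda e: model.fit(x, y, epochs=1, verbose=0))
```

Timing each epoch separately makes it easier to spot a slow first epoch caused by one-time setup such as kernel compilation.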

You'll find a bit more detail in the table below.

3 Epochs training run of the MNIST dataset on a simple Neural Network

| Average per Epoch | Total | Configuration |
|---|---|---|
| 130.3s | 391s | TensorFlow on Intel CPU |
| 47.6s | 143s | Metal on Radeon Pro 560 (Mac's built-in GPU) |
| 42.0s | 126s | OpenCL on Vega Frontier Edition |
| 25.6s | 77s | Metal on Vega Frontier Edition |
| N/A | N/A | Metal on Intel Graphics HD (crashed; feature was experimental) |
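To make the comparison concrete, the short sketch below recomputes the three-epoch totals from the per-epoch averages in the table and expresses each configuration as a speedup over the CPU run. The numbers are copied from the table; the function and variable names are illustrative.

```python
# (configuration, average seconds per epoch) taken from the table above.
RESULTS = [
    ("TensorFlow on Intel CPU", 130.3),
    ("Metal on Radeon Pro 560", 47.6),
    ("OpenCL on Vega Frontier Edition", 42.0),
    ("Metal on Vega Frontier Edition", 25.6),
]
EPOCHS = 3


def summarize(results, epochs=EPOCHS):
    """Return total run time and speedup relative to the first (baseline) row."""
    baseline = results[0][1]
    rows = []
    for name, per_epoch in results:
        rows.append({
            "configuration": name,
            "total_s": per_epoch * epochs,
            "speedup": baseline / per_epoch,
        })
    return rows


if __name__ == "__main__":
    for row in summarize(RESULTS):
        print("%-35s %6.1fs  %.2fx" % (
            row["configuration"], row["total_s"], row["speedup"]))
```

On these figures, Metal on the Vega eGPU comes out roughly five times faster than the CPU run, and noticeably ahead of OpenCL on the same card.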

Looking Forward

Thanks to Apple's hard work, macOS Machine Learning is only going to get better. Learning speed will increase, and tools will improve.

TensorFlow on Metal

Apple announced at their WWDC 2018 State of the Union that they are working with Google to bring TensorFlow to Metal. I was initially just excited to know TensorFlow would soon be able to do GPU programming on the Mac. However, knowing what Metal is capable of, I can't wait for the release to come out some time in Q1 of 2019. Factor in Swift for TensorFlow, and Apple are making quite the contribution to Machine Learning.

Create ML

Not all jobs require low-level tools like TensorFlow and scikit-learn. Apple released Create ML this year. It is currently limited to only a few kinds of problems, but it has made making some models for iOS so easy that, with a dataset in hand, you can have a model on your phone in no time.

Turi Create

Create ML is not Apple's only project. Turi Create provides a bit more control than Create ML, but it still doesn't require the in-depth knowledge of Neural Networks that TensorFlow would need. Turi Create is well-suited to many kinds of machine learning problems. It does a lot with transfer learning, which works well for smaller startups that need accurate models but lack the data needed to fine-tune a model. Version 5 added GPU support for a few of its models. They say more will support GPUs soon.

Unfortunately, my experience with Turi Create was marred by lots of bugs and poor documentation. I eventually abandoned it to build Neural Networks directly with Keras. But Turi Create continues to improve, and I'm very excited to see where it is in a few years.

Conclusion

It's an exciting time to get started with Machine Learning on macOS. Tools are getting better all the time. You can use tools like Keras on top of PlaidML now, and TensorFlow is expected to come to Metal later this quarter (2019Q1). There are great eGPU cases on the market, and high-end AMD GPUs have flooded the used market thanks to the crypto crash.

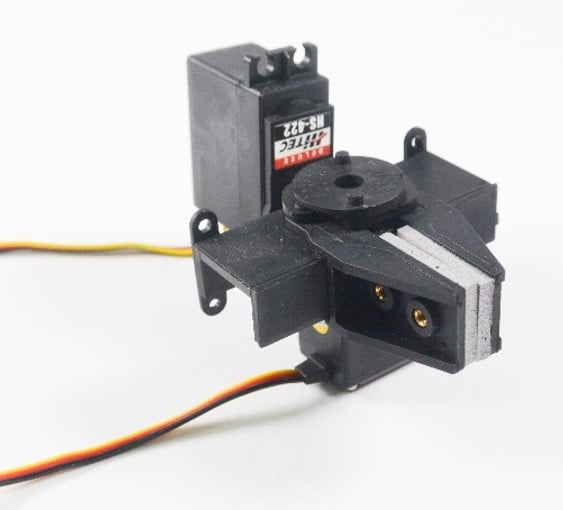

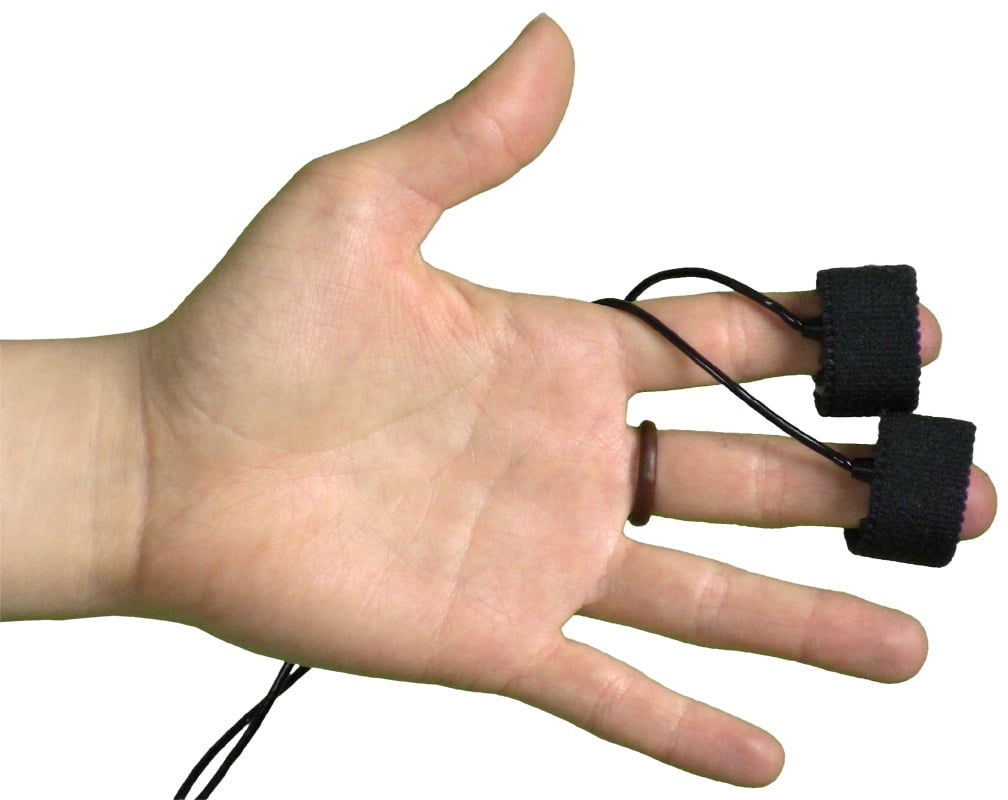

This project is a tutorial demo that gives users the opportunity to learn and practice British Sign Language (BSL) vocabulary. I had wanted to develop a machine learning program that could take advantage of BSL's expressive nature to create an artistic image.

Please note that input from Leap Motion is glitchy so it may take several tries to get it to recognise your handshape (sign) correctly.

| Field | Value |
|---|---|
| Status | Prototype |
| Platforms | Windows, macOS |
| Release date | Jun 23, 2019 |
| Author | UnknownBot |
| Genre | Educational |
| Tags | british-sign-language, bsl, Leap Motion, machine-learning, processing, student-project, wekinator |

Install instructions

You will need a Leap Motion, plus Processing (https://processing.org/) and Wekinator (http://www.wekinator.org/) installed, to play this. If you don't have them, you can still look at the Processing file called 'Output_10_Classes_DTW' to get an idea of what this project is like.

- Open both Processing programs:

  - 'Inputs_21_DTW'

  - 'Output_10_Classes_DTW'

- Open the software Wekinator-Kadenze.

- Click on the 'Done' button.

- Load the project named 'Wekinator.wekproj', which can be found inside the folder 'Wekinator'.

- Start listening on port 6448.

- Click on the 'Run' button.

- Run both open Processing programs.

- Place them side by side.

- Connect the Leap Motion.

- Ensure that the Leap Motion is in Desktop mode.

- Ensure that the green light is facing toward you.

- Click on any of the 5 buttons in the UI window (Output_10_Classes_DTW) to see a video clip of a BSL sign.

- Ensure that the Leap Motion window (Inputs_21_DTW) has been clicked on before attempting to sign.

- While signing, look at the bottom of the UI window to see what you're signing.
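If you have no Leap Motion and want to check that Wekinator is receiving data on port 6448, you can send it a hand-built OSC message. This is a rough sketch, not part of the project: it assumes Wekinator's default input address `/wek/inputs`, and it assumes 21 float inputs based only on the sketch name 'Inputs_21_DTW'. The function names are illustrative.

```python
import socket
import struct

WEKINATOR_PORT = 6448          # port named in the steps above
INPUT_ADDRESS = "/wek/inputs"  # assumed: Wekinator's default input address
NUM_INPUTS = 21                # assumed from the name 'Inputs_21_DTW'


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def build_wekinator_message(values, address=INPUT_ADDRESS):
    """Build one OSC message carrying `values` as big-endian float32s."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(">%df" % len(values), *values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_inputs(values, host="127.0.0.1", port=WEKINATOR_PORT):
    """Send one frame of input values to Wekinator over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(build_wekinator_message(values), (host, port))


# Typical use while Wekinator is listening:
#   send_inputs([0.0] * NUM_INPUTS)
```

Sending a few frames this way should make Wekinator's input indicator react, which helps separate Leap Motion glitches from connection problems.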

Good luck!
